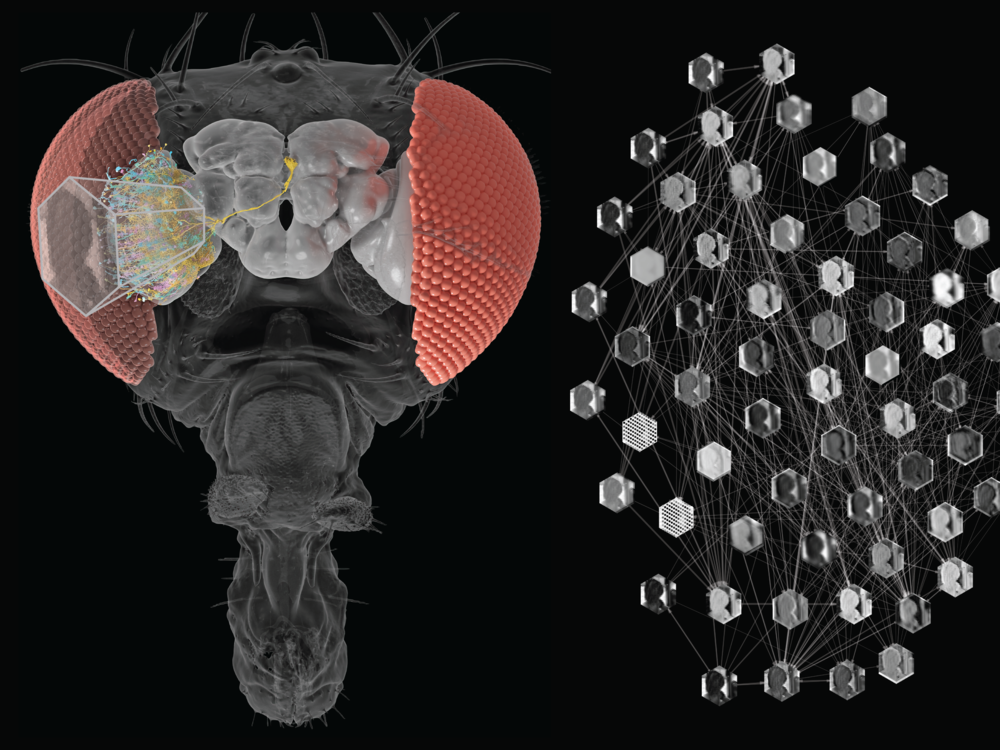

AI met fruit fly, and a better brain model emerged

Scientists have created a virtual brain network that can predict the behavior of individual neurons in a living brain.

The model is based on a fruit fly’s visual system, and it offers scientists a way to quickly test ideas on a computer before investing weeks or months in experiments involving actual flies or other lab animals.

“Now we can start with a guess for how the fly brain might work before anyone has to make an experimental measurement,” says Srini Turaga, a group leader at the Janelia Research Campus, a part of the Howard Hughes Medical Institute (HHMI).

The approach, described in the journal Nature, also suggests that power-hungry artificial intelligence systems like ChatGPT might consume much less energy if they used some of the computational strategies found in a living brain.

A fruit fly brain is “small and energy efficient,” says Jakob Macke, a professor at the University of Tübingen and an author of the study. “It’s able to do so many computations. It’s able to fly, it’s able to walk, it’s able to detect predators, it’s able to mate, it’s able to survive—using just 100,000 neurons.”

In contrast, AI systems typically require computers with tens of billions of transistors. Worldwide, these systems consume as much power as a small country.

“When we think about AI right now, the leading charge is to make these systems more power efficient,” says Ben Cowley, a computational neuroscientist at Cold Spring Harbor Laboratory who was not involved in the study.

Borrowing strategies from the fruit fly brain might be one way to make that happen, he says.

A model based on biology

The virtual brain network was made possible by more than a decade of intense research on the composition and structure of the fruit fly brain.

Much of this work was done, or funded, by HHMI, which now has maps that show every neuron and every connection in the insect’s brain.

Turaga, Macke and PhD candidate Janne Lappalainen were part of a team that thought they could use these maps to create a computer model that would behave much like the fruit fly’s visual system. This system accounts for most of the animal’s brain.

The team started with the fly’s connectome, a detailed map of the connections between neurons.

“That tells you how information could flow from A to B,” Macke says. “But it doesn’t tell you which [route] is actually taken by the system.”

Scientists have been able to catch glimpses of the process in the brains of living fruit flies, but they have no way to capture the activity of thousands of neurons responding to signals in real time.

“Brains are so complex that I think the only way we will ever be able to understand them is by building accurate models,” Macke says.

In other words, by simulating a brain, or part of a brain, on a computer.

So the team decided to create a model of the brain circuits that allow a fruit fly to detect motion, like the approach of a fast-moving hand or fly swatter.

“Our goal was not to build the world’s best motion detector, but to find the one that does it the way the fly does.”

The team started with virtual versions of 64 types of neurons, all connected the same way they would be in a fly’s visual system. Then the network “watched” video clips depicting various types of motion.

Finally, an artificial intelligence system was asked to study the activity of neurons as the video clips played.

Ultimately, the approach yielded a model that could predict how every neuron in the artificial network would respond to a particular video. Remarkably, the model also predicted the response of neurons in actual fruit flies that had seen the same videos in earlier studies.

A tool for brain science and AI

Although the paper describing the model has just come out, the model itself has been available for more than a year. Brain scientists have taken note.

“I’m using this model in my own work,” says Cowley, whose lab studies how the brain responds to external stimuli. He says the model has helped him gauge whether ideas are worth testing in an animal.

Future versions of the model are expected to extend beyond the visual system and to include tasks beyond detecting motion.

“We now have a plan for how to build whole-brain models of brains that do interesting computations,” Macke says.